Reading Comprehension Assistant (RCA)

A research-backed generative AI system designed to reduce planning workload in multilingual primary classrooms through structured prompt engineering and controlled system design.

Problem Context

Teachers in multilingual primary classrooms face significant workload pressure when creating differentiated reading materials. Producing levelled passages, structured comprehension questions, and printable worksheets is time-intensive and often repetitive.

The RCA was developed to reduce this burden while preserving teacher agency, curriculum alignment, and pedagogical control.

System Overview

The system integrates a large language model through a structured prompt engineering layer to generate:

- Levelled reading passages

- Editable comprehension questions

- Differentiated difficulty variants

- Print-ready worksheet exports (PDF)

Development followed a structured Software Development Life Cycle (SDLC) approach, incorporating iterative refinement based on teacher usability feedback.

Architecture

- Streamlit frontend interface

- LLM API integration via controlled prompt layer

- Parameterised generation logic

- Session-based state management

- Automated PDF export module

Prompt templates were engineered to constrain output structure, enforce readability levels, and maintain instructional clarity. System behaviour was designed to prioritise reliability and explainability over unrestricted generation.
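One way to favour reliability over unrestricted generation is to request JSON from the model and reject any response that does not match the expected shape. The sketch below is a minimal illustration under stated assumptions, not the RCA's actual implementation: the field names (`passage`, `questions`), the `validate_output` function, the `OutputFormatError` class, and the default question count are all hypothetical.

```python
import json


class OutputFormatError(ValueError):
    """Raised when a model response does not match the expected structure."""


def validate_output(raw_response: str, expected_questions: int = 5) -> dict:
    """Parse a JSON response and check it holds a passage and a question list.

    The field names and question count are assumptions made for illustration.
    """
    try:
        data = json.loads(raw_response)
    except json.JSONDecodeError as exc:
        raise OutputFormatError(f"Response is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise OutputFormatError("Response must be a JSON object.")

    passage = data.get("passage")
    if not isinstance(passage, str) or not passage.strip():
        raise OutputFormatError("Missing or empty 'passage'.")

    questions = data.get("questions")
    if not isinstance(questions, list) or len(questions) != expected_questions:
        raise OutputFormatError(f"Expected {expected_questions} questions.")
    if not all(isinstance(q, str) and q.strip() for q in questions):
        raise OutputFormatError("Every question must be a non-empty string.")

    # Return a cleaned copy so downstream code never sees stray whitespace.
    return {"passage": passage.strip(), "questions": [q.strip() for q in questions]}
```

A response that fails validation could be retried or surfaced to the teacher as an error, rather than rendered in a malformed state.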

Evaluation & Validation

The RCA was evaluated through structured usability testing with five practising primary educators. Feedback focused on:

- Efficiency improvements in lesson planning

- Clarity and appropriateness of generated outputs

- Curriculum alignment

- Perceived teacher control and trust

Results indicated measurable reductions in planning time and strong classroom applicability, supporting the system’s design decisions.

System Demonstration

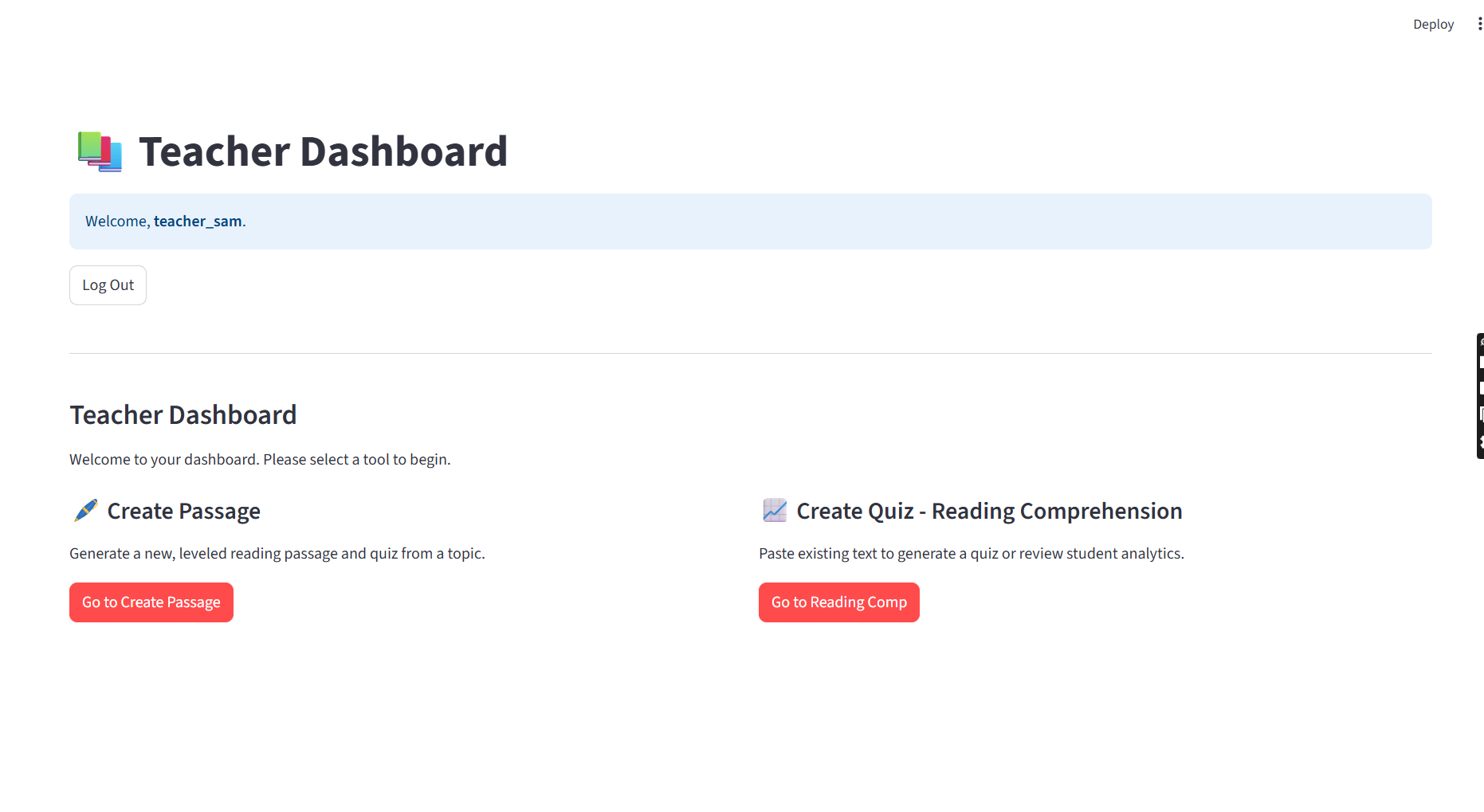

Upon login, teachers access a structured dashboard that centralises passage generation tools and previously created materials. This interface was designed to reflect real classroom workflows rather than experimental AI tooling.

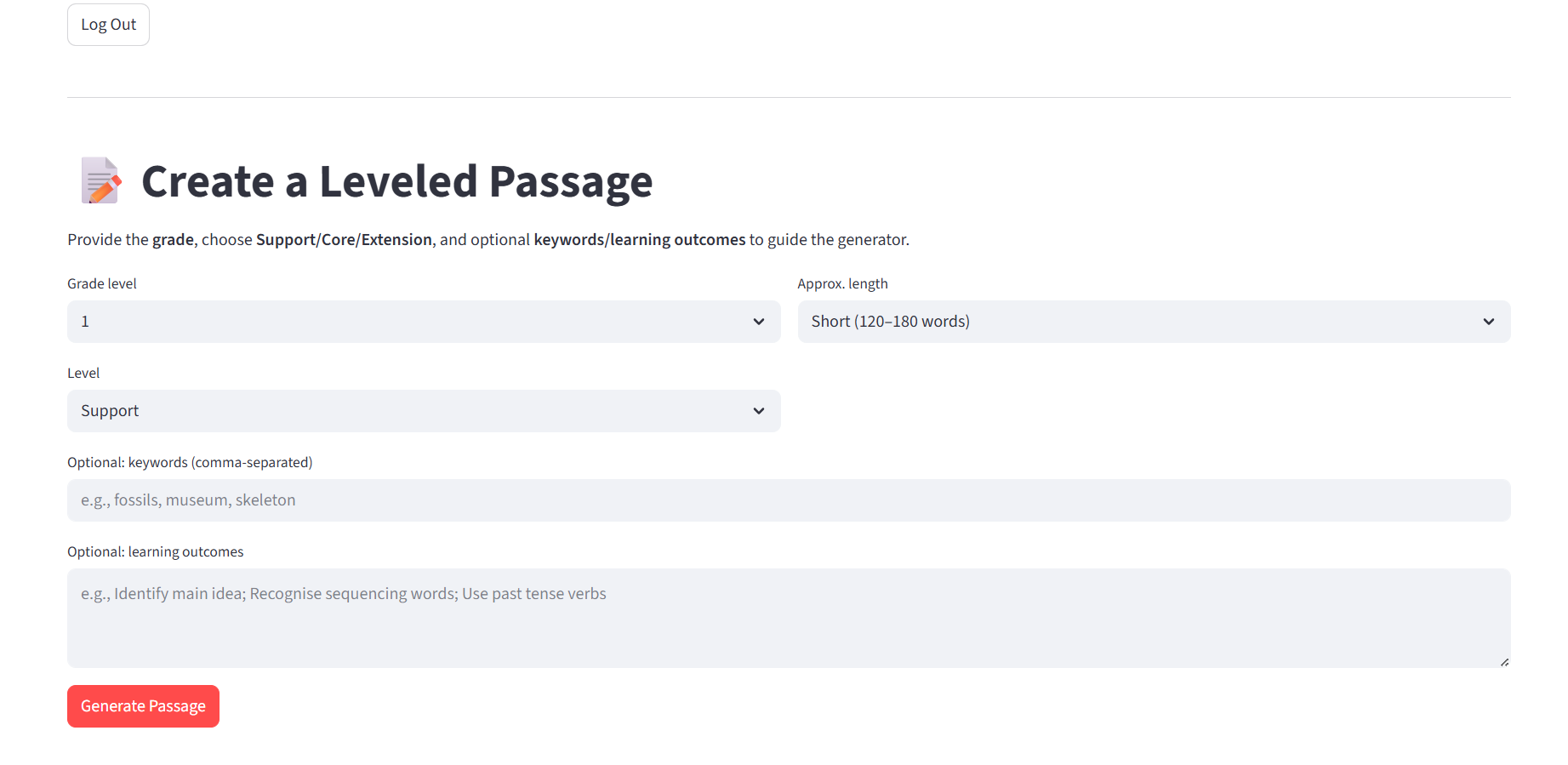

Teachers configure passage generation using structured parameters: grade level, differentiation tier (Support, Core, Extension), approximate length, and curriculum-aligned learning objectives. These inputs are translated into a controlled prompt structure that constrains model behaviour while preserving creativity. The resulting LLM output is structured, comprising a levelled passage and comprehension questions. The goal is not unrestricted generation, but reliable and pedagogically aligned output.
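The translation from teacher inputs to a controlled prompt might look roughly like the sketch below. It is an illustrative assumption, not the RCA's actual template: the `PassageRequest` class, the `build_prompt` function, the field names, and the prompt wording are all hypothetical. Only the parameter set and the three tier names come from the description above.

```python
from dataclasses import dataclass
from typing import Tuple

# Tier names taken from the description; everything else is hypothetical.
TIERS = ("Support", "Core", "Extension")


@dataclass(frozen=True)
class PassageRequest:
    grade_level: int
    tier: str
    approx_words: int
    objectives: Tuple[str, ...] = ()

    def __post_init__(self) -> None:
        # Reject invalid inputs before any prompt is built.
        if self.tier not in TIERS:
            raise ValueError(f"tier must be one of {TIERS}, got {self.tier!r}")
        if self.grade_level < 1:
            raise ValueError("grade_level must be a positive integer")
        if self.approx_words <= 0:
            raise ValueError("approx_words must be positive")


def build_prompt(req: PassageRequest) -> str:
    """Render validated parameters into a fixed-structure prompt string."""
    objectives = "\n".join(f"- {o}" for o in req.objectives) or "- (none specified)"
    return (
        f"Write a reading passage for grade {req.grade_level} pupils.\n"
        f"Differentiation tier: {req.tier}.\n"
        f"Target length: about {req.approx_words} words.\n"
        f"Learning objectives:\n{objectives}\n"
        "Return JSON with the keys 'passage' and 'questions' only."
    )
```

Validating parameters before rendering keeps free-form teacher input out of the instruction portion of the prompt, which is one way to make model behaviour more predictable.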

Generated comprehension questions remain fully editable, allowing teachers to refine wording, adjust cognitive demand, or align content more precisely with lesson objectives. This design choice reinforces teacher agency and positions the AI as a support tool rather than an automated decision-maker. The system prioritises collaboration between human expertise and generative assistance.
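Editable questions imply that the teacher's version is stored separately from what the model produced. The sketch below shows one possible approach, assuming a plain dictionary as a stand-in for Streamlit's dict-like `st.session_state`. The key names and helper functions are hypothetical and are not taken from the RCA codebase.

```python
def store_generated(state: dict, questions: list) -> None:
    """Save model output and start the editable copy from the same text."""
    state["generated_questions"] = list(questions)
    state["edited_questions"] = list(questions)


def edit_question(state: dict, index: int, new_text: str) -> None:
    """Apply a teacher edit to the editable copy only."""
    text = new_text.strip()
    if not text:
        raise ValueError("A question cannot be empty.")
    state["edited_questions"][index] = text


def changed_indices(state: dict) -> list:
    """Return the positions where the teacher's text differs from the model's."""
    pairs = zip(state["generated_questions"], state["edited_questions"])
    return [i for i, (original, edited) in enumerate(pairs) if original != edited]


# Usage: a plain dict stands in for st.session_state in this sketch.
state = {}
store_generated(state, ["Who is the main character?", "Where does the story happen?"])
edit_question(state, 1, "Where does the story take place?")
```

Keeping the original alongside the edited copy means a worksheet export can use the teacher's wording while the generated text remains available for comparison or reset.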

Live Demonstration

A controlled live demonstration of the system is available upon request. Please get in touch for access.